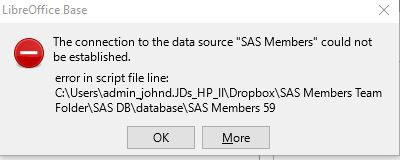

When trying to open the database, an error is displayed stating "the connection to the data source could not be established. Error in Script File line:" etc. The error box is below.

In addition, there are two extra files in the Database subfolder; a .data and .properties file both with “conflicted copy” and the user’s name added to the file name. Finally, there was a new subfolder named SAS Members.tmp which was empty. Other than the ‘59’ following the path in the error box, I see little to go on to find the problem, nor do I see a place to repair/change it. Thank you in advance for any help. Joe

Hello again,

Well it seems this answer may not be as easy as some of the others. It appears procedures may have been deviated from.

Simple review. A split DB is in its own folder with two sub-folders: database & driver. The driver folder has the hsqldb.jar (the actual DB engine) and the database folder contains the data, properties & script files - information relative to the data and table structures. The XXX.odb has macros that assist in making the DB portable. These macros also look to see if the database folder exists and, if not, treat it as a new split DB. If the database folder is not there or is named differently, the macros prompt for a new DB name and, on getting that, create a new database folder which, based on a few tests, includes another empty XXX.tmp folder. So it seems (just an educated guess) that somewhere along the line something like this happened and possibly files were moved around to try and repair the situation.

Here is what you can try. First, work with a copy of what you have. Then in the database folder, remove the two “additional” files and the XXX.tmp directory. Now start the .odb and if it asks for a DB name, cancel the dialog. Now you should be at the main Base screen. From the menu, select Edit->Database->Properties. The top line (General) has the data source info similar to:

hsqldb:file:////home/YOUR_DIRECTORIES/database/YOURDB;default_schema...

Right before the ;default_schema should be the same name as the database files in the database folder. If not, change it, select OK & save the .odb (star indicator should be visible on Save icon). Now see if you can select the Tables section.
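For anyone who would rather check this than eyeball it, here is a minimal Python sketch of the comparison described above. It assumes the data source line is copied out of Properties by hand, and that the database folder should hold a .script, .properties and .data file named after the DB, as mentioned in this thread. The function names `db_name_from_url` and `missing_db_files` are made up for illustration and are not part of LibreOffice or HSQLDB.

```python
from pathlib import Path


def db_name_from_url(url: str) -> str:
    """Return the DB base name: the last path component before the first ';'."""
    # Drop ";default_schema=..." and every option after it.
    location = url.split(";", 1)[0]
    # Accept either slash style, since Windows paths may mix them.
    return location.replace("\\", "/").rsplit("/", 1)[-1]


def missing_db_files(database_dir: Path, db_name: str) -> list[str]:
    """List expected files (by name) that are absent from the database folder."""
    # Assumption: these are the three files named in the thread.
    expected = [f"{db_name}.script", f"{db_name}.properties", f"{db_name}.data"]
    return [name for name in expected if not (database_dir / name).is_file()]


if __name__ == "__main__":
    # Hypothetical, shortened data source line for illustration only.
    example = r"hsqldb:file:///C:\Temp\database/SAS Members;default_schema=true"
    print(db_name_from_url(example))  # SAS Members
```

If `missing_db_files` returns an empty list for your database folder, the name in Properties matches the files on disk and the problem is likely elsewhere.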

This is all I have for now based upon the info you have provided.

Thanks for your response. Unfortunately I don’t see a difference in the path and the Properties. I moved everything to a separate location and it behaves with the same connection error. Also, it did not ask for a new DB name. In the error box I get now, it contains the (different) correct path but then adds a ‘space’ and ‘59’ at the end. This is not in Properties. I’ve tried to post the path, but I get a message saying the comment is forbidden.

Here is the Properties path: hsqldb:file:///C:\Users\Joe\Documents\LibreOffice\SAS DB Backup 11-06-17 w-errors\database/SAS Members;default_schema=true;shutdown=true;hsqldb.default_table_type=cached;get_column_name=false

Since posting, I went back to my test Dropbox and found a couple of “conflicted copy” files there from a few months back. They didn’t have any effect on my opening the DB. The only thing I can think of at this point, if you are willing, is for you to zip up the database directory and post it. If you do this, you may have to change the extension to .odb for the forum to accept the post.

Looks as if you posted while I was commenting. Are you sure of the path? Looks wrong.

...errors\database/SAS Members;default_schema=true.. should be:

...errors\database\SAS Members;default_schema=true..

My current working development copy/directory and a recent backup all have ..errors\database/SAS Members;default_schema=true... I’ll put the folder into a zip and see if I can post it for you to see.

Do you have a suggestion of what to exclude, as everything exceeds the size limit? Can I skip the Driver sub directory (it exceeds the size limit by itself)? Can I strip out Forms, Reports and queries, as the .ODB is over 150% of max? Also, the database.data is over the limit, and I’m blocked from accessing it. Or, can you suggest a better zip tool than the Windows built-in one (which hardly zipped it down at all, maybe 10%)?

All I was hoping for were the files in the database directory. However, based upon your comment, even the .data file may be too large. Let’s try this. Just post the .script file. Maybe I can figure out something from that. You may have to change the extension from .script to .odb so it will post. I can always change it back.

I was able to compress the database directory (.data, .properties and .script) to quite small. I’ve added it above in the question. I changed the ZIP to .odb so it’ll need to be changed back. I’ll try the real .odb next. Nope. Can’t get it small enough. I’ll wait for your further direction. Thank you so much for your help.

OK. Got the files. Created a new split DB. Replaced its files with yours. Got the error you were getting. Opened the .script file with a text editor. Line 59 is:

SET TABLE PUBLIC."Filter" INDEX '11074 0 1'

Appears to be just a Filter table. Deleted this line from .script file, saved the file & opened the .odb. No more error. Able to access the tables (including an empty table named “Filter”). You may want to try this on your copied files and verify some of the data.
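The same inspect-then-delete step can be sketched in Python, for those who prefer not to hand-edit. This is only an illustration under a few assumptions: that the number in the error box is a 1-based line number in the .script file, that the .odb is closed, and that you are working on a copy. The helper names `read_script_line` and `remove_script_line` are hypothetical. The file is handled as raw bytes so nothing else in it is altered.

```python
from pathlib import Path
import shutil


def read_script_line(script_path: Path, line_no: int) -> str:
    """Return line `line_no` (1-based) of the .script file, for inspection."""
    lines = script_path.read_bytes().splitlines()
    # Decode for display only; undecodable bytes are shown as placeholders.
    return lines[line_no - 1].decode("utf-8", errors="replace")


def remove_script_line(script_path: Path, line_no: int) -> Path:
    """Delete line `line_no` (1-based), keeping a .bak copy of the original."""
    backup = script_path.with_name(script_path.name + ".bak")
    shutil.copy2(script_path, backup)  # keep the untouched file next to it
    lines = script_path.read_bytes().splitlines(keepends=True)
    del lines[line_no - 1]
    script_path.write_bytes(b"".join(lines))
    return backup
```

Look at the line with `read_script_line(path, 59)` first and only remove it if it refers to a table you can afford to lose or rebuild. As noted further down, deleting such a line for a more complex table may not be so simple to recover from.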

Also, I deleted the files from your post.

You are one magic wizard. You did it again. Thank you so much.

Now, any idea what caused this so we can prevent it in the future?

Please, I’m no wizard or anything like that. God gave me the ability to try things in different ways which others may not. When I get results is how I can help others with this gift. It is my pleasure to help.

As far as what caused the problem, not certain at this time. Will try to come up with a solution but have no immediate direction. Sometimes an idea just comes to me to try. Will post if I discover anything.

Added note. I had to insert a zero into the key field of the Filter table. It became NULL after deleting the line from the .script file. Otherwise, all seems well. Thank you again. Standing by for your ideas on above comment.

Update: The “conflicted copy” files are a Dropbox function, so I’ll move there to deal with that. I do wonder if the whole issue wasn’t a result of Dropbox not handling the files correctly, as there was only one user active at the time (JohnD). Still don’t understand the cause of the SAS Members.TMP file. Thanks again.

Have seen more on the XXX.tmp folder. This seems to be created each time with this split DB process once tables are accessed. This can be through table view, query or form. When the .odb is closed, the XXX.tmp folder is removed. Don’t know its purpose. The “conflicted copy” files seem to generate if you run the .odb right from the Dropbox folder. Not sure why they are generated but those I’ve witnessed don’t seem to affect anything.

One more thing. Since this XXX.tmp folder was still present in your set-up, it may be the .odb wasn’t closed properly. An example may be running the .odb from the Dropbox folder & then the connection is lost. This type of thing may also account for the problem with the Filter table as the data was lost there. Improper close didn’t allow proper table save. Keep in mind this is only speculation. There may be other reasons.

Thank you for the additional information. In looking through your .tmp reasoning, I read that it is possible to actually keep the .tmp file and then see the details of all transactions. Don’t think I’ll do that for now. I think you’re pretty close on the problem/issue and it certainly makes sense. I’ve been looking, but didn’t find, info on the .script file so I can understand what was wrong with the line. Deleting a line for a more complex table might not be so simple to recover.

Not sure where you got info on the .tmp directory. My tests show it is always empty. As for transaction details, these are actually in the xxx.log file in the database directory, which typically goes away when the .odb is successfully closed. And lastly, you are absolutely right about the .script file. Since it was the Filter table, the loss was insignificant. With another table, as you state, it “might not be so simple to recover”. Haven’t studied the details, but the line may hold pointers into the DB where this table resides.
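Pulling the observations in this thread together, a quick check before opening the .odb could look for the three warning signs mentioned: “conflicted copy” files, a leftover XXX.tmp folder, and a leftover xxx.log file. The Python sketch below is only that, a sketch. It assumes all three would sit directly in the database folder, and the function name `find_leftovers` is made up for illustration. Finding something does not prove the DB is damaged; as said above, the link to an improper close is speculation.

```python
from pathlib import Path


def find_leftovers(database_dir: Path) -> dict[str, list[str]]:
    """Group suspicious entries in the database folder by kind."""
    found: dict[str, list[str]] = {
        "conflicted_copies": [],  # Dropbox "conflicted copy" duplicates
        "tmp_folders": [],        # XXX.tmp folder that normally goes away on close
        "log_files": [],          # xxx.log that normally goes away on close
    }
    for entry in sorted(database_dir.iterdir()):
        name = entry.name
        lowered = name.lower()
        if "conflicted copy" in lowered:
            found["conflicted_copies"].append(name)
        elif entry.is_dir() and lowered.endswith(".tmp"):
            found["tmp_folders"].append(name)
        elif entry.is_file() and lowered.endswith(".log"):
            found["log_files"].append(name)
    return found
```

Run it only while the .odb is closed, since the .tmp folder and .log file are expected to exist while the database is in use.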