Hello,

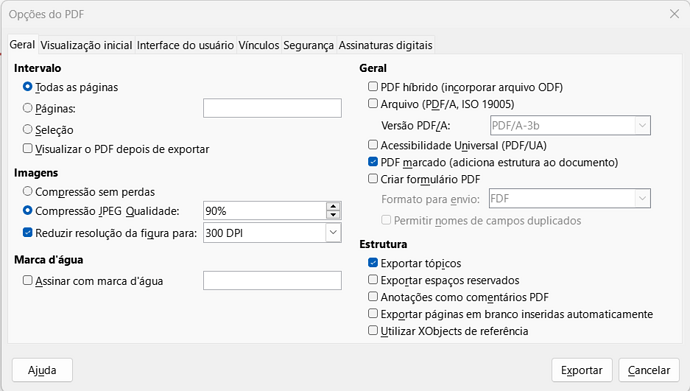

I’ve got a few MS Word files (which I cannot, unfortunately, attach here due to not having rights to it) which I needed to convert into PDF format for usage. The original file is in .docx format, contains few images, and comes in at 2,227,762 bytes. I export to PDF using LibreOffice 7.4.3.2 (x64) — not the latest version, to be sure, but reasonably close — using standard options, except for the addition of a document outline based on the title structure of the original document. Here are the options used:

The resulting PDF has 19,313,871 bytes (about 8.67 times as large as the original .docx). Now, in some cases, one may wind up with a large PDF because of large images which are compressed poorly (or not at all). But this strikes me as unlikely here, given that such large images would make the original document large as well. So I looked for another explanation.

I downloaded a Python application for PDF size optimization (pdfsizeopt) and applied it to the PDF. Below you will find the log:

C:\pdfsizeopt>pdfsizeopt.exe RR109.pdf RRv109-opt.pdf

info: This is pdfsizeopt ZIP rUNKNOWN size=69856.

info: prepending to PATH: C:\pdfsizeopt\pdfsizeopt_win32exec

info: loading PDF from: RR109.pdf

info: loaded PDF of 19313871 bytes

info: separated to 134065 objs + xref + trailer

info: parsed 134065 objs

info: eliminated 614 unused objs, depth=170

info: found 0 Type1 fonts loaded

info: found 0 Type1C fonts loaded

info: will optimize image XObject 44133; orig width=572 height=167 colorspace=/DeviceRGB bpc=8 inv=False filter=/FlateDecode dp=0 size=4946 gs_device=png16m

info: saving PNG to C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.parse.png

info: written 4785 bytes to PNG

info: optimizing 1 images of 4946 bytes in total

info: executing image converter sam2p_np: sam2p -j:quiet -pdf:2 -c zip:1:9 -s Gray1:Indexed1:Gray2:Indexed2:Rgb1:Gray4:Indexed4:Rgb2:Gray8:Indexed8:Rgb4:Rgb8:stop -- C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.parse.png C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.sam2p-np.pdf

info: loading image from: C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.sam2p-np.pdf

info: loaded PNG IDAT of 4505 bytes

info: executing image converter sam2p_pr: sam2p -j:quiet -c zip:15:9 -- C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.parse.png C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.sam2p-pr.png

info: loading image from: C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.sam2p-pr.png

info: loaded PNG IDAT of 6353 bytes

info: executing image converter pngout: pngout -force C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.sam2p-pr.png C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.pngout.png

In: 6410 bytes C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.sam2p-pr.png /c2 /f5

Out: 5908 bytes C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.pngout.png /c2 /f5

Chg: -502 bytes ( 92% of original)

info: loading image from: C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.pngout.png

info: loaded PNG IDAT of 5851 bytes

info: optimized image XObject 44133 file_name=C:\Users\wtrmu\AppData\Local\Temp\psotmp.33668.img-44133.sam2p-np.pdf size=4667 (94%) methods=sam2p_np:4667,parse:4940,#orig:4946,pngout:6063,sam2p_pr:6565

info: saved 279 bytes (6%) on optimizable images

info: optimized 624 streams, kept 41 #orig, 19 uncompressed, 564 zip

info: eliminated 52482 duplicate objs

info: compressed 1 streams, kept 0 of them uncompressed

info: saving PDF with 80969 objs to: RRv109-opt.pdf

info: generated object stream of 1169270 bytes in 80360 objects (7%)

info: generated 4684655 bytes (24%)

As you can see, the input PDF has 19,313,871 bytes distributed among 134,065 objects plus xref and trailer. First, 614 unused objects are discarded. Then a single image is recompressed from 4,946 bytes to 4,667 bytes (saving 279 bytes). Next, 52,482 duplicate objects are eliminated (39% of all objects in the file) and 1 stream is compressed. The remaining 80,969 objects, 80,360 of them packed into an object stream of 1,169,270 bytes, are written to an optimised PDF file of 4,684,655 bytes (24% of the original).
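For anyone who wants to check the percentages, here is a small Python sketch that recomputes them. The numbers are copied from the log; the variable names are my own and only illustrative.

```python
# Figures copied from the pdfsizeopt log; variable names are illustrative.
input_bytes = 19_313_871      # "loaded PDF of 19313871 bytes"
output_bytes = 4_684_655      # "generated 4684655 bytes"
total_objs = 134_065          # "parsed 134065 objs"
unused_objs = 614             # "eliminated 614 unused objs"
duplicate_objs = 52_482       # "eliminated 52482 duplicate objs"

# Objects left after both elimination passes.
remaining_objs = total_objs - unused_objs - duplicate_objs

# Share of duplicates among all parsed objects, and output size relative to input.
duplicate_share = duplicate_objs / total_objs
size_ratio = output_bytes / input_bytes

print(f"objects remaining: {remaining_objs:,}")
print(f"duplicate share:   {duplicate_share:.1%}")
print(f"output/input size: {size_ratio:.1%}")
```

The remaining object count matches the "saving PDF with 80969 objs" line in the log.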

Now, this isn’t properly a bug, since the PDF export routines do generate valid PDFs. But it is certainly a misfeature that those PDFs can contain nearly 40% duplicate objects, and have such a structure that an optimiser can cut the file to slightly under a quarter of its size simply by discarding duplicate objects (and possibly compressing some streams with DEFLATE).
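To illustrate what I mean by consolidating duplicate objects, here is a toy Python sketch. It is not how pdfsizeopt or LibreOffice work internally, as far as I know; it only assumes that objects are available as a mapping from object number to serialized bytes, and the function name and sample data are made up for the example.

```python
import hashlib


def deduplicate(objects):
    """Merge objects whose serialized bodies are byte-identical.

    `objects` maps object number -> body (bytes). Returns (kept, remap):
    the surviving objects, and a map from every original object number
    to the number that now stands in for it.
    """
    first_seen = {}  # digest -> number of the first object with that body
    kept = {}
    remap = {}
    for num in sorted(objects):
        body = objects[num]
        digest = hashlib.sha256(body).digest()
        canonical = first_seen.setdefault(digest, num)
        if canonical == num:
            kept[num] = body  # first occurrence survives
        remap[num] = canonical
    return kept, remap


# Hypothetical toy input: three identical dictionaries and one distinct object.
toy = {
    1: b"<< /Type /ExtGState /CA 1 >>",
    2: b"<< /Type /ExtGState /CA 1 >>",
    3: b"<< /Length 0 >>",
    4: b"<< /Type /ExtGState /CA 1 >>",
}
kept, remap = deduplicate(toy)
print(sorted(kept))  # [1, 3]
print(remap)         # {1: 1, 2: 1, 3: 3, 4: 1}
```

A real implementation would also have to rewrite every reference using `remap` and repeat the pass, since objects that pointed at different copies of the same thing become identical once their references are unified.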

So my question is, what is the procedure to request an improvement to the PDF generation so that the library which LO uses may try to consolidate objects to get its output to a sane size?