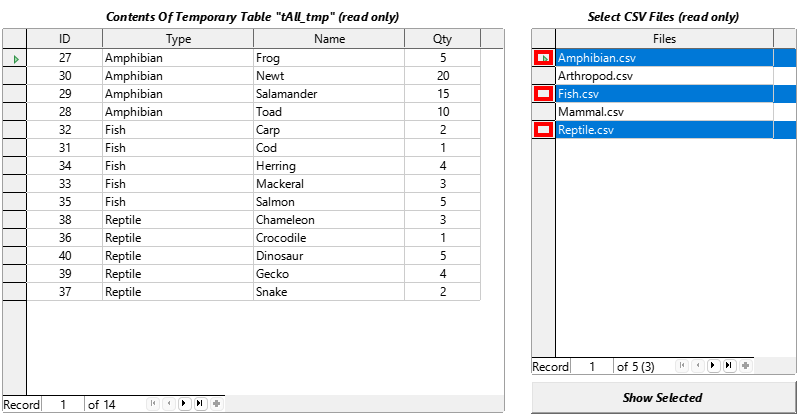

I have a LibreOffice Calc document in which I want to run calculations on standardized CSV files that all live in the same folder, but I don’t want to import each file into Calc manually. The files all have the same format, so the spreadsheet should not care if I remove, swap or add files between sessions. I just want the contents of all of them to appear in the same sheet.

I am fine with a solution that involves linking to a database, as long as the same conditions are met. I have already tried Base and found that it can import all CSV files in a folder, but I found no way of merging them dynamically. The only options I could find required naming every table involved explicitly.

To be clear, I do NOT want to create a separate sheet for every file, as in this answer: Multiple csv/ods files into individual tabs in single spreadsheet. The contents of all files should appear in one sheet, and that sheet should change on update if the original files have been swapped out.
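
For illustration only, the merge described above can be sketched outside Calc as a small Python script. This is a minimal sketch under stated assumptions, not a Calc or Base feature: it assumes every file is UTF-8, has an identical header row, and ends in `.csv`. The function name `merge_csv_folder` and the output file name are hypothetical.

```python
import csv
from pathlib import Path


def merge_csv_folder(folder, output):
    """Concatenate every *.csv in `folder` into `output`, writing the header once.

    Assumes (hypothetically) that all files share the same header row.
    Returns the number of data rows written.
    """
    folder = Path(folder)
    output = Path(output)
    # Collect the sources first and skip the output file itself,
    # in case it is stored in the same folder as the inputs.
    sources = sorted(
        p for p in folder.glob("*.csv") if p.resolve() != output.resolve()
    )
    header = None
    rows_written = 0
    with output.open("w", newline="", encoding="utf-8") as out_file:
        writer = csv.writer(out_file)
        for path in sources:
            with path.open(newline="", encoding="utf-8") as in_file:
                reader = csv.reader(in_file)
                file_header = next(reader, None)
                if file_header is None:
                    continue  # empty file, nothing to merge
                if header is None:
                    header = file_header
                    writer.writerow(header)
                elif file_header != header:
                    raise ValueError(f"{path.name} has a different header")
                for row in reader:
                    writer.writerow(row)
                    rows_written += 1
    return rows_written
```

Rerunning the script after files have been added, removed or swapped regenerates the merged file from whatever is currently in the folder, so a single sheet linked to that one file would follow the folder contents without any file names being hard-coded.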