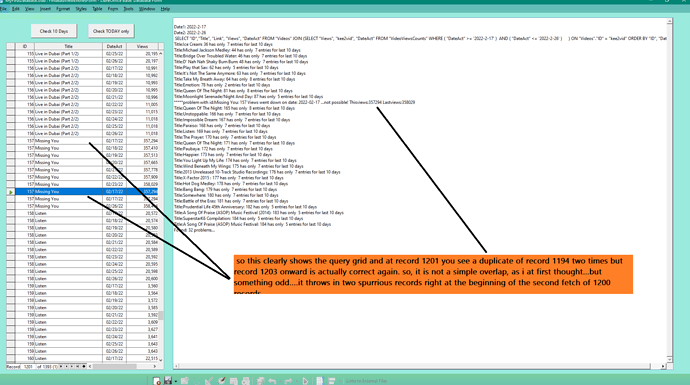

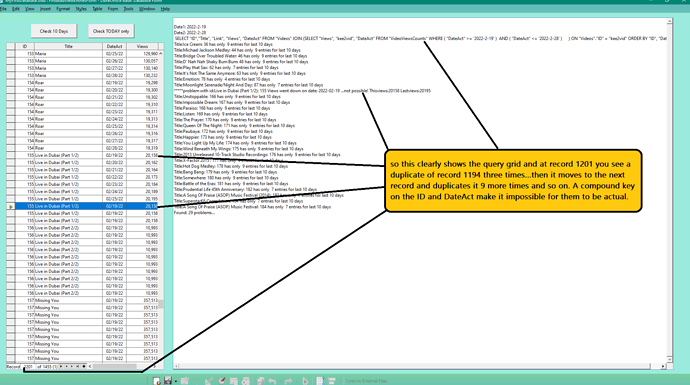

Dealing with a lot of data. Pull it each day, analyze it, categorize it and put it into the database. Was finding it increasingly difficult to manually validate all of the data…so made some utility routines to run through the data searching for particular errors that have been cropping up. With so much data, so many retrievals…errors happen. But found an error this morning that had me scratching my head. After doing the daily data pull and putting it into the database…i ran the utilities to make sure that no duplicates or omissions occurred. Well, it kept saying there were duplicate records, so i physically went through the data table and there were NO duplicates. So here is what i found…my utility routine that searches for duplicates uses a query with a [fetchsize] of 1200 records and throws it into a GRID.

(just a brief aside: LO loosely uses the term ‘form’ for a whole lot of different things, which is completely befuddling. i use ‘dataset’ or ‘DS’ for the data and ‘GRID’ for the GUI where the user gets to see and work with the DS. It is not actually that simple, but that does make it easier for me to keep straight.)

In the grid, the first fetch got records 1-1200. Then in the second fetch, it gave me records 1194-1393 (that was the proper end of the data, so no further fetches were required). But note that the second fetch duplicated records 1194-1200, seemingly creating an overlap of data (duplicates). There is no duplication in the actual data, because index violation claxons would have started blaring…rather, the duplicates are only seen in the temporary DS from the query. Is this a bug? i don’t know… i just upped my [fetchsize] to 2000. That will work for now but will have to be pushed larger as the volume of data increases. Or should i NOT set any fetchsize at all and just go with the system’s default? Will that avoid these ghost duplicates?
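One way to separate "duplicates in the table" from "duplicates in the fetched DS" is to ask the database itself, so the answer does not depend on any fetch size. The sketch below is only an illustration: it uses Python's built-in `sqlite3` as a stand-in for the real database, and the table name `readings`, the key column `id`, and both helper functions are hypothetical. The record ranges are the ones reported above.

```python
import sqlite3

def find_duplicate_keys(conn, table, key_column):
    """Return (key, count) rows for keys stored more than once in the table.

    Assumption: table and key_column are trusted, hypothetical names;
    identifiers cannot be passed as SQL parameters, so they are quoted inline.
    """
    sql = (
        f'SELECT "{key_column}", COUNT(*) FROM "{table}" '
        f'GROUP BY "{key_column}" HAVING COUNT(*) > 1 '
        f'ORDER BY "{key_column}"'
    )
    return conn.execute(sql).fetchall()

def overlapping_ids(first_page, second_page):
    """Return the keys that show up in both fetched pages."""
    return sorted(set(first_page) & set(second_page))

# Stand-in data: 1393 unique records, as in the post.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (id INTEGER PRIMARY KEY, value TEXT)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?)",
    [(i, f"row {i}") for i in range(1, 1394)],
)

# Check 1: duplicates in the stored data (none are expected here).
table_dupes = find_duplicate_keys(conn, "readings", "id")

# Check 2: keys repeated across the two fetches described above
# (records 1-1200, then records 1194-1393).
ghosts = overlapping_ids(range(1, 1201), range(1194, 1394))

print("duplicates in table:", table_dupes)
print("keys repeated across fetches:", ghosts)
```

If the first check comes back empty while the second does not, the repeats exist only in the fetched DS and not in the stored data, which matches what was seen in the grid.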

Could not find a place to upload the pic…and drag and drop was putting it into the middle of my text…so…

You can send it as a PM - private/personal message. Go to my user profile & in upper right corner is Message. Will send one to you so all you need to do is respond with the file.

Only see one. No, you cannot move posts between questions. Best you can do is copy one, paste it in the other & when satisfied delete the erroneous post (may take a day for the delete to happen).

ok…manually copy pasted to the correct thread then deleted from here…

so…you are interested in reproducing the fetchsize problem?

yes…and i was just checking to see if the fetchsize error was still reproducible. And the results are complicated. It still has the error at record 1201…but additionally it has found a lot of other data entry errors…which will take me some time to verify or refute…

Data has been added since last time so now the error occurs at a different record… and creates many more duplicates now than before…

Better. I will delete some items here to clean it up. Have you seen the PM I sent you earlier?

Sorry, it needs to be less than 1MB

Unzip & delete Pictures folder

Possibly your main issue.

Copying to fresh DB is simply one item at a time. Copy table in old, paste in new.

Well…not sure it is worth all that trouble, honestly. But really do value your time and input. Thanks. Prob be talking to you again before long about my next amazing problems…

Oh…just thought of something…i got a discord server account…easy to transfer it to you that way if you have discord installed?

Don’t even know what that is. So probably won’t work.

I’m sure if you tried the unzipping (use a copy) you would see it only takes a few minutes. The copying doesn’t take very long either. Less time than all these messages.

But it is up to you.

i just copied it to a fresh new database file… basically same size…odd

Images embedded in tables? Large memo fields? Have a difficult time seeing a file that large unless it holds a great deal of data. Had over 80K records for a size like that.

it had stayed remarkably small for a long long time…like 374K…but one day i looked at it and it had gotten really big. Only has 1 png file in it…so that is not the problem. no biggie… here is something odd… all the junk code and unused tables and queries and forms that i deleted to make it smaller… hmmmm, Libre put them all right back in…

What about my earlier suggestions - unzip & remove pictures (this may be best bet) or send in pieces?

my zip utility won’t work anymore unless i send money…

and i only have 1 pic in there…and it’s smallish, less than 400k.
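For what it's worth, the unzip-and-remove-pictures step does not strictly need a paid zip utility, since an .odb file is an ordinary zip archive. A minimal sketch using Python's standard `zipfile` module follows. The function name `strip_pictures` is made up for illustration, and it assumes the embedded images sit under a `Pictures/` folder inside the archive, as the suggestion above implies. It writes a new file and leaves the original untouched; the manifest inside the copy may still list the removed images, so treat the result as a trimmed copy for sharing rather than a repaired database.

```python
import zipfile

def strip_pictures(src_path, dst_path, prefix="Pictures/"):
    """Copy a zip-based file to dst_path, skipping entries under `prefix`.

    Returns the names of the entries that were left out. Entry order and
    per-entry compression settings are carried over from the source file.
    """
    removed = []
    with zipfile.ZipFile(src_path) as src, zipfile.ZipFile(dst_path, "w") as dst:
        for info in src.infolist():
            if info.filename.startswith(prefix):
                removed.append(info.filename)
                continue
            # Passing the ZipInfo object keeps the original compression type.
            dst.writestr(info, src.read(info.filename))
    return removed
```

A call would look like `strip_pictures("mydata.odb", "mydata_small.odb")`, where both file names are placeholders.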

Discord is pretty awesome…and i feel sure they have it running on linux. But there is this other free site i can upload it to for transfer…if i can remember the name…

Something you replied to this guy is also connected to my problem. Since i changed the fetchsize to the rowcount and reload, then do .last and .first… that destroys my query contents. So i took off the .last .first part…because hitting the last record just sends it into the weeds for some reason. On a dataset it seems fine but not on a query. But i’ve never noticed this before and wonder if it only happens on certain types of queries… ???

That is an entirely different situation, dealing with a SQLite3 database and a multi-field key with one of the fields being potentially and intentionally blank.

Now if you followed along with that, I believe you would agree that this is not part of your design nor the database you are using.

Right…about all of that but the part about the query getting all weird once you hit the final record in the table is the same…the data looks perfect until you scroll or myobj.last to the final record…then it totally messes with all of the records…changes everything…that is happening to mine as well…

Have not seen query do as you state. It is different from the other post. Still waiting for your sample.