I have a large Calcfile (>20MB) with 5 worksheets, the largest of which has an active array of formulas: 3120 rows and 639 columns. I am using XP and LibreOffice 4.2.0.4. When I try to expand the large array by copying many rows from the top of the array to append them to the bottom, I get odd behavior. After several minutes of apparent inactivity, the file vanishes without any comments or messges. That is, the file no longer appears as an open file. I tried increasing the swap file size to 4GB, but that did not help. Any suggestions?

Please what is you LIbreOffice version?

I am using 4.2.0.4

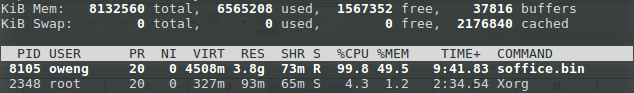

I have performed a basic test here under Crunchbang 11 x86_64 using v4.2.0.4. I created a sheet with 100,000 rows and a different formula in each of 10 columns i.e., 1,000,000 cells in the sheet. I then copied this sheet seven times to create an ODS of ~96 MB containing 8m cells. Saving this file produces these stats:

It does take a while to save / manipulate but all actions were completed successfully. It may be that you are experiencing a problem related either to your particular array / formula use, or something related to RAM usage under WinXP. Can you get another aplication to use the same level of RAM?

Thank you very much for your answer. My guess is the same as yours: that my problem is related to being in theWinXP environment. I do not have another large application to experiment with. However, I will try running the Calc spreadsheet using an Ubuntu system and see if that helps.

By the way, how did you generate the stat report you show in your comment?

@Buzz, the stats are the output from the top command in the terminal. Press q to exit the command. Handy for testing CPU / RAM usage with large files. It may still be an issue with array handling in your case as that one array is ~2m cells, which is quite large. Either way, it will require further testing (e.g., under a different OS as you indicate).

Thanks oweng. I understand that top is a command I can invoke from the LINUX terminal enviroment. I will try that. I tried copying the full 3120 rows of my array of formulas in Calc using Ubuntu 13.107 on LENOVO IDEAPAD 210 with 4MG of RAM. Calc just hung, with the dispay of the spreadsheet turning grey.

My XP runs on a DELL Inspiton 537 with 2GB of RAM.

2GB RAM is probably not going to be enough for an array that size. From memory, I was getting ~1.3GB usage for ~2m cells (2 sheets) of basic formulas (SUM, SIN, COS, LOG, etc.) and that is with the formula memory sharing benefits from v4.2.0. 4GB may even be tight, depending on what else is running on the system.

It looks to me some kind of bug, maybe if RAM is getting full of use and LibreOffice does not check if RAM is still available, so it stays in some strange state. But you know this is going to be difficult to track down and reproduce the problem. In general if you are doing aggressive tasks you need to have a software/hardware suitable for the task.

One more point. When you are starting to ask yourself of the upper limits of some product, then you probably need to ask yourself if this is the most appropriate software to use. Maybe you are in the area when you should start using a database software (e.g. LibreOffice Base) instead of a spreadsheet.

Thanks L-user. If I was still using a work environment avaialble to me before I retied, I would probably program the application in C++ and run it on a main-frame. I have had a lot of expereince with EXCEL, but the ancient version I use on my middle-aged desktop was quickly over-extended. I am still in the learning stages with LibreOffice, but the spreadsheet environemt seems to be a good match, except for apparently unspecified size limitations.

The application is a sumulation of variable load uses of a variable number of resources. The 3120 rows represent one full day of activity from 8am to 9pm in 15 second intervals. Each column repearts the same formula for each row, Most of the columns contain long fornulas involving fairly complex logic - typically nested IF functions up to 8 levels deep.

I had originally planned to calculate the simulation without macros for 20 days for each configurartion of resources to be studied. With the size contraint that the simulation is limited to 1 day, I now plan to write a macro which will iterate the 1 day simulation 20 times, and collect the results as 20 rows of statistics which can be combined to give me the 20 day simulation that I want.