Description

BaseImage: linuxserver/libreoffice:7.6.3

Version: LibreOffice 7.6.3.1 60(Build:1)

CPU: 3000Mi @ 2.50GHz

Memory: 4G

I have a Java service that manages LibreOffice processes. When the Java program starts, it launches LibreOffice through unoserver, and DOCX files are then converted to PDF through a script call.

The relevant Java fragment is:

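```java
import java.io.IOException;
import java.io.UncheckedIOException;

private static void startUnoServer() {
    try {
        // Start unoserver as a child process. Its stdout/stderr are left as
        // pipes to this JVM (ProcessBuilder's default) and are never read here.
        ProcessBuilder processBuilder = new ProcessBuilder("unoserver");
        processBuilder.start();
    } catch (IOException e) {
        // start() throws only IOException; catching the checked
        // InterruptedException here would not compile.
        throw new UncheckedIOException("Failed to start unoserver", e);
    }
}
```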
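The `java.lang.Process` documentation warns that a child process whose output is not read promptly may block or even deadlock, because some platforms provide only limited buffers for its standard streams. The fragment above starts unoserver with the default piped stdout/stderr and never reads them. A minimal sketch of one way to rule that out, assuming unoserver writes its log output to stdout/stderr: merge the two streams and append them to a file instead of a pipe. The class name `UnoServerLauncher` and any log path passed to it are hypothetical.

```java
import java.io.File;
import java.io.IOException;

// Hypothetical helper class; the log file location is the caller's choice.
final class UnoServerLauncher {

    // Configure (but do not start) the unoserver child process.
    static ProcessBuilder configure(File logFile) {
        ProcessBuilder pb = new ProcessBuilder("unoserver");
        // Merge stderr into stdout so there is a single output stream.
        pb.redirectErrorStream(true);
        // Append all child output to a file rather than a pipe to the JVM,
        // so the child cannot block on a full, unread pipe buffer.
        pb.redirectOutput(ProcessBuilder.Redirect.appendTo(logFile));
        return pb;
    }

    static Process start(File logFile) throws IOException {
        return configure(logFile).start();
    }
}
```

For example, `UnoServerLauncher.start(new File("/tmp/unoserver.log"))`, where the path is only an illustration. If the log lines are not needed at all, `ProcessBuilder.Redirect.DISCARD` (Java 9+) is an alternative to `appendTo`.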

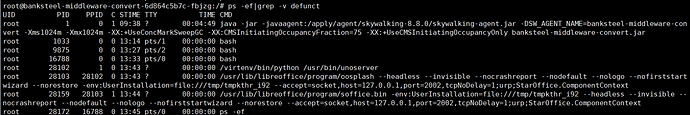

The processes running on the server are as follows:

At this point, file conversion can start.

Question

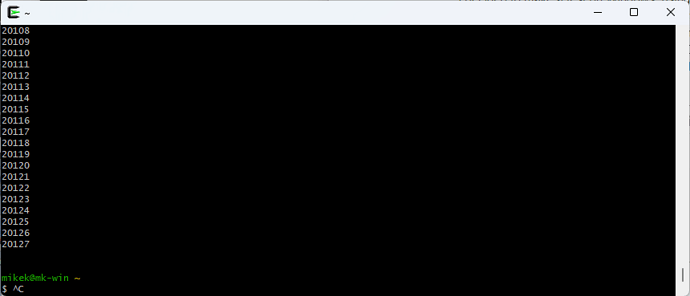

In our testing, the conversion gets stuck after roughly 220 conversions. Killing the stuck thread does not help: the next conversion gets stuck as well. Only killing soffice.bin and restarting the LibreOffice service lets conversions continue, and then it gets stuck again at around the 220th conversion.
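Whatever the root cause, a conversion call that waits indefinitely leaves the service stuck until someone intervenes. A minimal sketch of bounding each call, assuming the conversion script ultimately runs unoserver's `unoconvert` client as `unoconvert <infile> <outfile>`; `BoundedRunner` is a hypothetical name. Killing the client does not unstick `soffice.bin`, so a `false` result is only a signal that the server side probably needs the same restart described above.

```java
import java.io.IOException;
import java.time.Duration;
import java.util.List;
import java.util.concurrent.TimeUnit;

// Hypothetical helper: run one external command with an upper time bound.
final class BoundedRunner {

    /** Returns true only if the command exited with status 0 within the timeout. */
    static boolean runWithTimeout(List<String> command, Duration timeout)
            throws IOException, InterruptedException {
        ProcessBuilder pb = new ProcessBuilder(command);
        pb.redirectErrorStream(true);
        // Discard output (Java 9+) so this child cannot block on a full pipe either.
        pb.redirectOutput(ProcessBuilder.Redirect.DISCARD);
        Process p = pb.start();
        if (!p.waitFor(timeout.toMillis(), TimeUnit.MILLISECONDS)) {
            // Timed out: kill the client and report failure to the caller.
            p.destroyForcibly();
            p.waitFor();
            return false;
        }
        return p.exitValue() == 0;
    }

    // Assumed command shape for unoserver's client; the output format
    // is taken from the output file's extension.
    static List<String> unoconvertCommand(String inFile, String outFile) {
        return List.of("unoconvert", inFile, outFile);
    }
}
```

Usage might look like `BoundedRunner.runWithTimeout(BoundedRunner.unoconvertCommand("/temp/a.docx", "/temp/a.pdf"), Duration.ofMinutes(2))`; the two-minute limit is an arbitrary choice.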

We suspected JVM memory, but it appears to be unrelated: with the JVM memory set to either 1 GB or 16 GB, the conversion still gets stuck after about 220 conversions.

We also tried DOCX files with different contents and page counts. Whether the file is 200 KB or 5 MB, it gets stuck after about 220 conversions.

But we discovered something strange:

Case Ⅰ: gets stuck after about 220 conversions

- The DOCX file path is `/temp/<32-character UUID>/<32-character UUID file name>`

Case Ⅱ: gets stuck after about 260 conversions

- The DOCX file path is `/temp/a.docx`

After shortening the path to a one-character file name directly under `/temp`, the number of successful conversions rose by a few dozen, but the conversion still got stuck eventually.
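The path-length effect is consistent with a pipe-buffer stall, although nothing above proves it: if unoserver writes a log line mentioning each converted file's path (an assumption), longer paths would fill an unread stdout/stderr pipe (commonly 64 KiB on Linux) in fewer conversions. If the service should keep those log lines rather than write them to a file, a hedged alternative is a daemon thread that continuously reads the child's merged output. `OutputDrainer` and the thread name are hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.function.Consumer;

// Hypothetical helper: keep reading a child process's output on a daemon thread.
final class OutputDrainer {

    static Thread drain(InputStream in, Consumer<String> sink) {
        Thread t = new Thread(() -> {
            try (BufferedReader r = new BufferedReader(
                    new InputStreamReader(in, StandardCharsets.UTF_8))) {
                String line;
                while ((line = r.readLine()) != null) {
                    sink.accept(line); // e.g. forward to the service's logger
                }
            } catch (IOException e) {
                // Stream closed (e.g. the process was destroyed); nothing left to read.
            }
        }, "unoserver-output-drainer");
        t.setDaemon(true);
        t.start();
        return t;
    }
}
```

For example: `Process p = new ProcessBuilder("unoserver").redirectErrorStream(true).start();` followed by `OutputDrainer.drain(p.getInputStream(), System.out::println);`.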

What can I do to make DOCX-to-PDF conversion stable?