The attached file contains a set of macros that draw a Shape3DPolygonObject with red lines and green sides. The colors are set in DrawPolygon3D.

DrawPolygon3D.ods (11.0 KB)

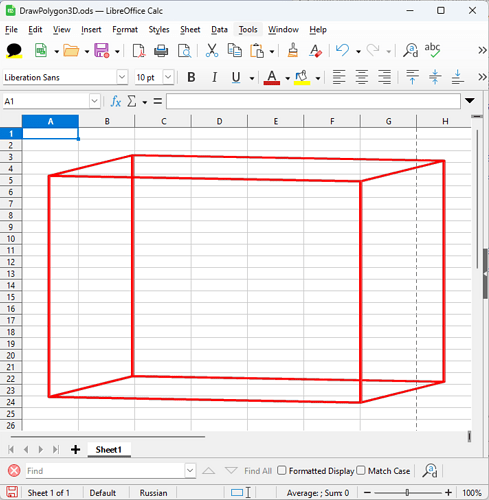

However, running the Main macro gives this:

The line colors are applied correctly, while the sides are transparent.

Editing setPLPoligon to reduce the number of polygons from 6 to 5 (indices 0 to 4), like this:

    Function setPLPoligon()
        Dim SequenceX1(4), SequenceY1(4), SequenceZ1(4)

        SequenceX1(0) = Array(  0, 100, 100,   0,   0)
        SequenceY1(0) = Array(  0,   0,   0,   0,   0)
        SequenceZ1(0) = Array(100, 100,   0,   0, 100)

        SequenceX1(1) = Array(  0, 100, 100,   0,   0)
        SequenceY1(1) = Array(  0,   0, 100, 100,   0)
        SequenceZ1(1) = Array(100, 100, 100, 100, 100)

        SequenceX1(2) = Array(  0, 100, 100,   0,   0)
        SequenceY1(2) = Array(100, 100, 100, 100, 100)
        SequenceZ1(2) = Array(100, 100,   0,   0, 100)

        SequenceX1(3) = Array(  0,   0,   0,   0,   0)
        SequenceY1(3) = Array(  0, 100, 100,   0,   0)
        SequenceZ1(3) = Array(100, 100,   0,   0, 100)

        SequenceX1(4) = Array(100, 100, 100, 100, 100)
        SequenceY1(4) = Array(  0, 100, 100,   0,   0)
        SequenceZ1(4) = Array(100, 100,   0,   0, 100)

        ' SequenceX1(5) = Array(  0, 100, 100,   0,   0)
        ' SequenceY1(5) = Array(  0,   0, 100, 100,   0)
        ' SequenceZ1(5) = Array(  0,   0,   0,   0,   0)

        Dim Sequence As New com.sun.star.drawing.PolyPolygonShape3D
        Sequence.SequenceX = SequenceX1
        Sequence.SequenceY = SequenceY1
        Sequence.SequenceZ = SequenceZ1
        setPLPoligon = Sequence
    End Function

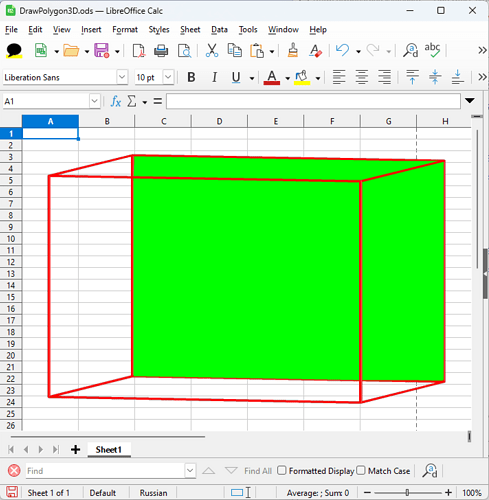

produces this changed result:

So the sides clearly are colored, but something (face orientation?) prevents the intended rendering.
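To test the orientation guess, here is a rough diagnostic sketch in Basic. It is not part of DrawPolygon3D.ods: `CheckFaceOrientation` is a hypothetical helper, and it assumes the body is centered at (50, 50, 50), which is only inferred from the 0 to 100 coordinates above. For each face returned by setPLPoligon, it computes a normal from the vertex order (Newell's method) and reports whether that normal points away from the assumed center. Whether the renderer actually uses this winding to decide which side of a face is visible is an assumption I have not verified.

```basic
' Hypothetical diagnostic helper, not part of the original macros.
' Assumes the body center is (50, 50, 50), inferred from the coordinates.
Sub CheckFaceOrientation()
    Dim oPoly, aSeqX, aSeqY, aSeqZ, aX, aY, aZ
    Dim i As Long, j As Long, k As Long
    Dim nx As Double, ny As Double, nz As Double, d As Double
    Dim sReport As String

    oPoly = setPLPoligon()
    aSeqX = oPoly.SequenceX
    aSeqY = oPoly.SequenceY
    aSeqZ = oPoly.SequenceZ

    For i = LBound(aSeqX) To UBound(aSeqX)
        aX = aSeqX(i)
        aY = aSeqY(i)
        aZ = aSeqZ(i)

        ' Newell's method: sum over the edges (j -> k) of the polygon.
        nx = 0 : ny = 0 : nz = 0
        For j = LBound(aX) To UBound(aX)
            k = j + 1
            If k > UBound(aX) Then k = LBound(aX)
            nx = nx + (aY(j) - aY(k)) * (aZ(j) + aZ(k))
            ny = ny + (aZ(j) - aZ(k)) * (aX(j) + aX(k))
            nz = nz + (aX(j) - aX(k)) * (aY(j) + aY(k))
        Next j

        ' Dot product of the normal with the vector from the assumed
        ' center to the first vertex of the face: positive means outward.
        d = nx * (aX(LBound(aX)) - 50) _
          + ny * (aY(LBound(aY)) - 50) _
          + nz * (aZ(LBound(aZ)) - 50)

        sReport = sReport & "Face " & i & ": normal (" & nx & ", " & ny & ", " & nz & ") "
        If d > 0 Then
            sReport = sReport & "points outward" & Chr(10)
        Else
            sReport = sReport & "points inward" & Chr(10)
        End If
    Next i

    MsgBox sReport
End Sub
```

If the report shows a mix of inward and outward faces, reversing the vertex order of the inward ones in setPLPoligon would be the first thing to try.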

Could someone please suggest what should be changed in the macros to make the 3D body render with all 6 sides green and opaque? @Regina, I am sure you know the answer! Thank you!
